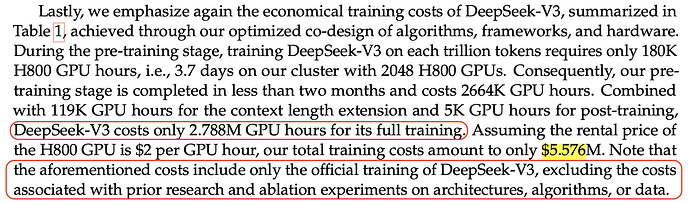

There is a table totaling the GPU count.

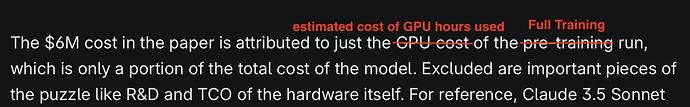

Many errors in the SemiAnalysis article, e.g.

IMHO, if they can’t get the facts right… no need to take SemiAnalysis seriously.


Two points raised by Chamath on the All-In podcast…

One huge issue with NVDA chips is that they require expensive cooling systems. It means a data center built with NVDA chips requires a huge amount of energy. This is bad. Remember how INTC is being replaced by ARM?

Look for a startup that can come up with a lower-power chip that excels at inference.

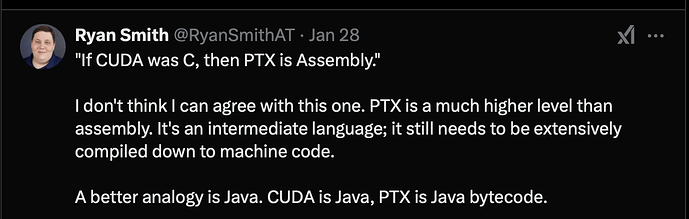

I saw some tech guy post that PTX is a low-level language and CUDA is a high-level language. The two are not comparable.


That’s correct. DeepSeek shows it is worthwhile to go down to PTX since it saves $$$. Why spend so much $$$ on chips, energy and personnel? DeepSeek has done the heavy plumbing; just copy the source code and modify it for your specific use case.

Nvidia sales to Singapore. Where did those chips go?


Hint: to power 20% of Nvidia’s annual shipments, Singapore would need to build multiple nuclear plants every year. I don’t think Singapore is doing that. Which country is building an insane number of power plants?

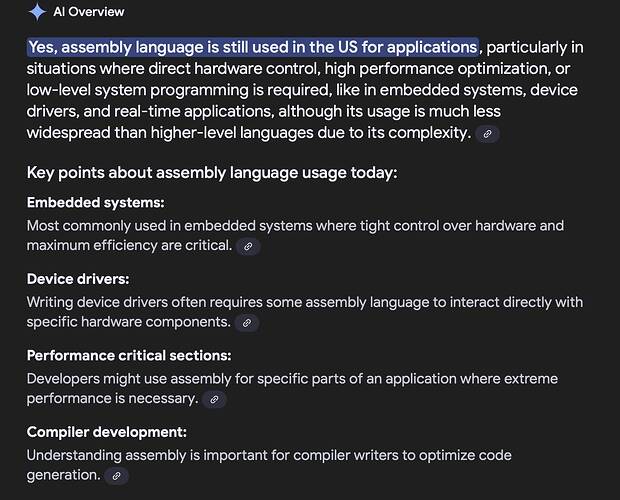

PTX is one level lower than CUDA. It’s like saying people coding in assembly are going to make C obsolete. Higher-level languages exist for a reason. Programming in assembly takes a lot more engineering resources. In the US, engineering resources are better spent on pushing the frontiers.

The H800 is also a very lamely handicapped product. Nvidia just did the bare minimum to pass the US sanctions requirement. The H800 is every bit the same as the H100 except that bandwidth is kept artificially low. The DeepSeek guys just went down to the PTX level to manage inter-chip communication more efficiently. Basically, they pass smaller chunks of data more frequently instead of doing it in one big bang after computation.
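To make the "smaller chunks, more frequently" point concrete, here is a toy timing model in Python. It is only a sketch under my own assumptions, not DeepSeek's actual code: the function names `big_bang_time` and `chunked_time` are made up for illustration, work is assumed to split into equal chunks, and per-message latency is ignored. It just shows why overlapping transfers with computation hides a slow link.

```python
def big_bang_time(compute_s: float, transfer_s: float) -> float:
    """Finish all computation first, then send everything in one transfer."""
    return compute_s + transfer_s


def chunked_time(compute_s: float, transfer_s: float, n_chunks: int) -> float:
    """Split the work into n equal chunks and send each chunk while the
    next one is being computed (a simple two-stage pipeline).

    Assumption: no per-message overhead, which a real interconnect has.
    """
    if n_chunks < 1:
        raise ValueError("n_chunks must be at least 1")
    c = compute_s / n_chunks   # compute time per chunk
    t = transfer_s / n_chunks  # transfer time per chunk
    # The first chunk has to be computed before anything can be sent, and
    # the last chunk still has to be sent after compute ends. In between,
    # the slower of the two stages sets the pace.
    return c + (n_chunks - 1) * max(c, t) + t


if __name__ == "__main__":
    # Hypothetical numbers: 8 s of compute, 4 s of transfer on a slow link.
    for n in (1, 2, 4, 16):
        print(n, chunked_time(8.0, 4.0, n))
```

With those made-up numbers, one big transfer costs 12 s in total, while 4 chunks bring it down to 9 s. As the chunk count grows the total approaches the larger of the two stage times, so in this model a bandwidth cut hurts much less once the transfers are hidden behind compute.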

One point I didn’t see anyone bring up is that ChatGPT o1-mini level, aka DeepSeek-level, performance is NOT the end of the road for AI. If it were, then we could say Nvidia has a big problem. We are just getting warmed up. People keep talking about replacing millions of workers with AI. Has it happened yet? No. So we are not even close. Developing that level of AI will take many more years of Nvidia GPU investment. Yesterday’s new model from OpenAI already beat DeepSeek R1.

We need to do a better job cutting China off. That means closing the Singapore loophole and also cutting China off from our software. Sanctioning hardware alone is not gonna cut it.

I look forward to the day when phone trees are replaced with AI that can intelligently answer your question and if necessary direct you to the right human without 10 minutes of pressing keys and holding. Just give me that.

Can you tell the difference between an H100 and an H20 from a 2D image?

For those who don’t understand the use cases of assembly language… CS101. Clever use of assembly language in specific areas increases performance dramatically, costs less in terms of GPU time and can actually take less overall development time. Ofc, it may not be implementable with sub-par SWEs who already have trouble coding in C/C++.


David He could be scamming the author by passing off H20s as H100s.

Chamath on Stargate.

…

Shorter version

…

Facts vs myths (by 4 nerds, not random bloggers or media or self-interested CEOs)

…

Nvidia this week has described DeepSeek as “an excellent AI advancement,” while Trump has said it should be a “wake-up call” for U.S. industries.

Believe these guys or some random bloggers?

CRWD

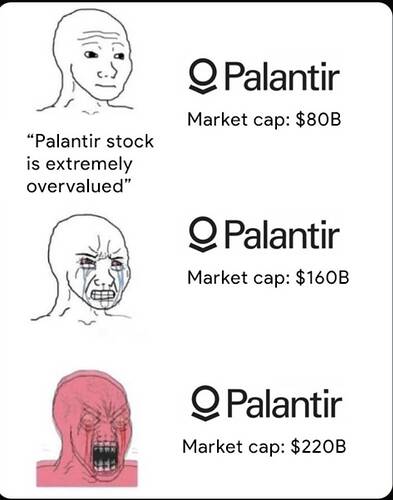

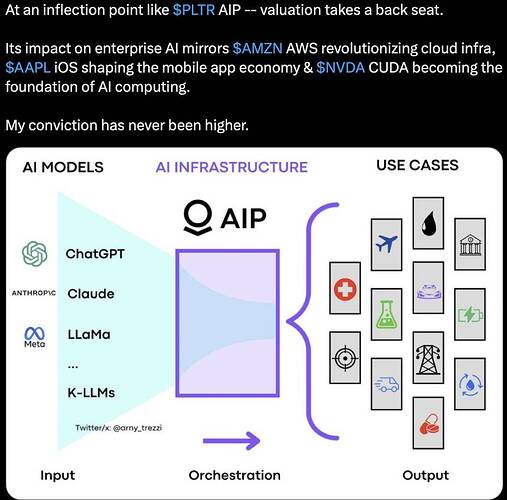

PLTR

META

TSM

…

Feb 4, 2025


Thinking aloud: are there still people who don’t understand PLTR and think it is not an AI stock?

…

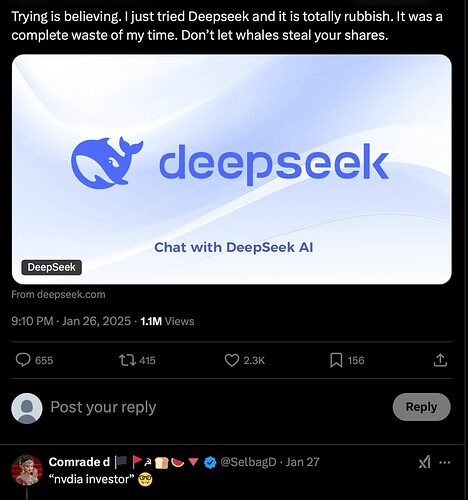

I was assured DeepSeek would hurt Nvidia because everyone would just program in PTX to make better use of old Nvidia chips.

But then Google just announced in today’s earnings that they will increase 2025 capex from $59B to $75B.

Google engineers don’t know how to program? Maybe they should learn from random Twitter accounts.